Mura On-Premises Installation

Mura Hosting Environment

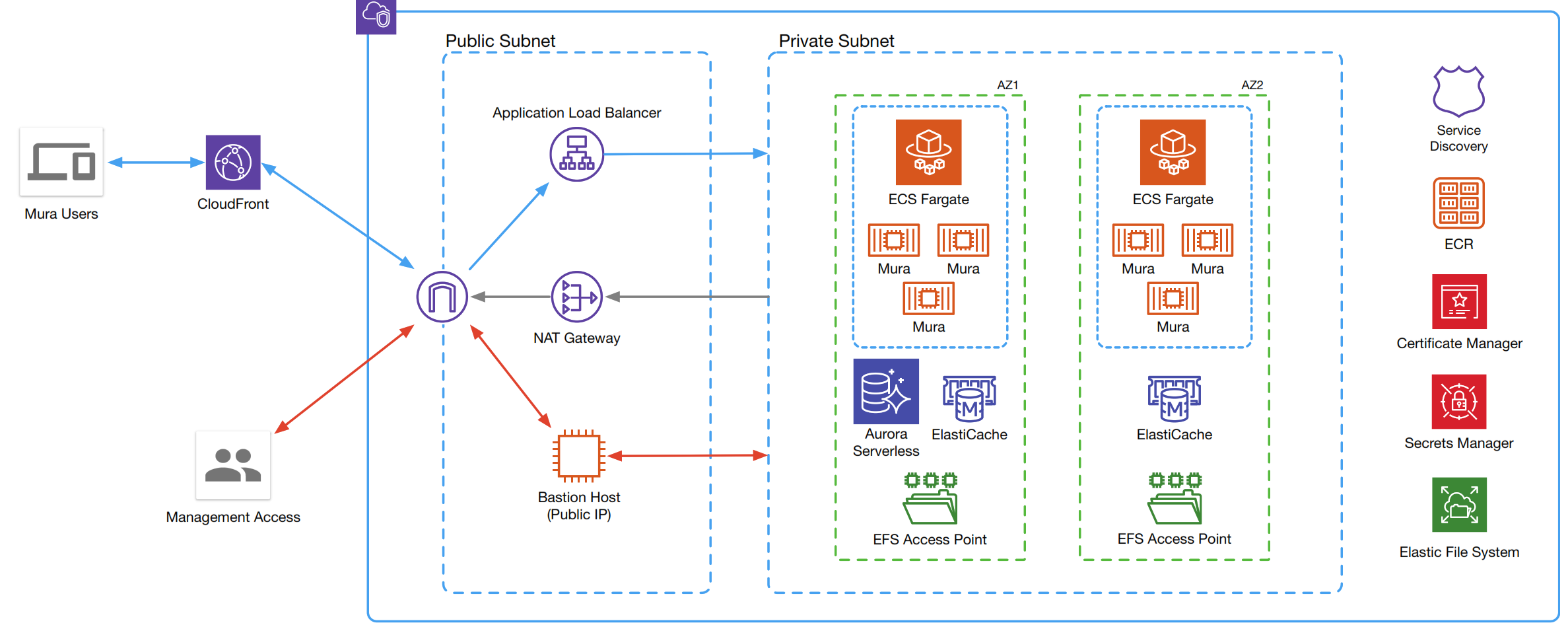

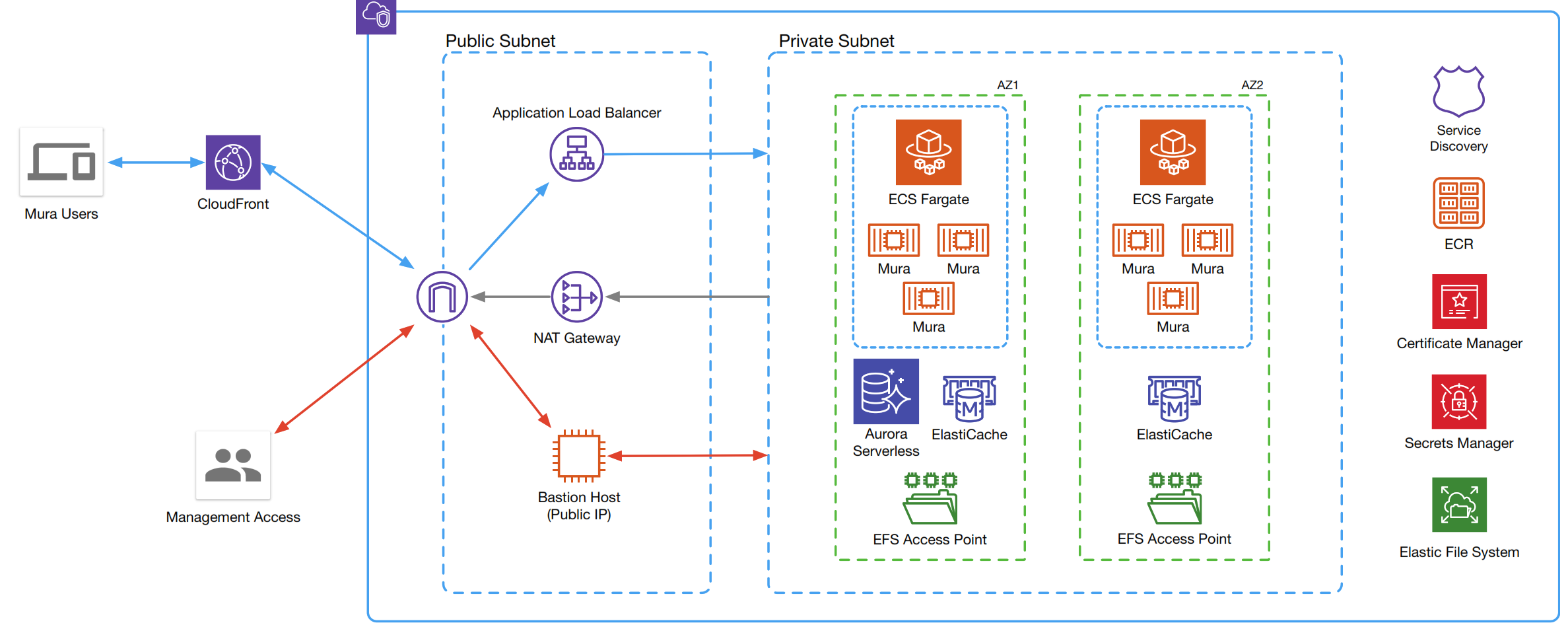

The Mura hosting configuration creates a secure, redundant, self-healing Virtual Private Cloud (VPC) on Amazon Web Services (AWS), using a range of Amazon and integrated third-party services.

VPC lets you provision a logically isolated section of the AWS Cloud where you can launch AWS resources in a virtual network. Web servers and application servers in the VPC can leverage Amazon EC2 elasticity and Auto Scaling features to grow and shrink as needed.

Every Mura VPC is private and dedicated solely to the customer.

Route 53 with Service Discovery provides communication between the Mura Docker containers, which allows for multiple containers and multiple services. Along with its own Service Discovery, each VPC also has its own private Certificate Manager for managing SSL and other certificates, a Secrets Manager for keeping API keys, passwords, and other private values out of deployed repositories, and an Elastic File System (EFS) for file storage.
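With service discovery, a container reaches another service by a DNS name, and that name can resolve to one address per running container. The Python sketch below shows only the client side of that idea using the standard library resolver. The function name is hypothetical, and so is any service name you pass it (for example a name such as "mura.example.local"); it is not part of Mura or the AWS SDK.

```python
from __future__ import annotations

import socket


def resolve_service(hostname: str, port: int) -> list[str]:
    """Return the sorted, de-duplicated IP addresses a service name resolves to.

    When each running container is registered as a DNS record under one
    service name, this list contains one address per registered container.
    """
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})
```

Inside the VPC, a caller would pass the service-discovery name and port; outside it, such a name will not resolve and getaddrinfo raises socket.gaierror.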

Mura Configuration

Mura is instanced via Docker containers. Docker “containerizes” all the services required to spin up an instance of Mura, including web server, database and application engine. Many of these services are themselves Docker Images, integrated into the instance via the “compose” file.

The Mura Docker container holds the latest official Mura image, with the option of running it on Lucee or Adobe ColdFusion 2016 (CF2016) based on the CommandBox Docker image, or on the official Lucee Docker image. The Docker compose file may optionally include references to other images, such as a mail server, Elasticsearch, or other integrated services.

The Mura Docker “compose” file contains references to the above images, as well as the environment variables needed to complete the installation.

There are several other configuration files that are used in a “deployed” environment such as the VPC. You can view an example of these in a GitHub Gist. These include configurations for Mura itself, NGINX, and Docker.

cfapplication.cfc (example)

The cfapplication.cfc file holds the base configuration for the Mura instance, alongside environment variables supplied from other sources (e.g., the Docker compose file). Separate configurations can be used for staging (review) and live (production), for instance.

    this.ramBase = {
        class: 'lucee.runtime.cache.ram.RamCache'
        , storage: false
        , custom: {
            "timeToIdleSeconds": "3600",
            "timeToLiveSeconds": "10800"
        }
        , default: ''
    };

    // This must be done per site
    this.cache.connections["default-data"] = duplicate(this.ramBase);
    this.cache.connections["default-output"] = duplicate(this.ramBase);

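In the example above, a RAM cache definition for the Lucee application engine is created once and duplicated into the default-data and default-output cache connections. Cached entries expire after 3,600 seconds (one hour) without being accessed, or after 10,800 seconds (three hours) in total, whichever comes first.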
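To make the interaction between the two timeouts concrete, here is a minimal Python model of an entry governed by timeToIdleSeconds=3600 and timeToLiveSeconds=10800. It is an illustration of idle/live expiry in general, not Lucee's RamCache implementation; the class and method names are hypothetical, and times are passed in explicitly so the behavior is easy to follow.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class _Entry:
    value: Any
    created: float      # when the entry was stored (seconds)
    last_access: float  # when the entry was last stored or read (seconds)


class IdleLiveCache:
    """Toy cache with time-to-idle and time-to-live expiry.

    An entry expires when it has not been read for `time_to_idle` seconds
    OR when it is older than `time_to_live` seconds, whichever comes first.
    """

    def __init__(self, time_to_idle: float = 3600, time_to_live: float = 10800):
        self.time_to_idle = time_to_idle
        self.time_to_live = time_to_live
        self._entries: dict[str, _Entry] = {}

    def put(self, key: str, value: Any, now: float) -> None:
        self._entries[key] = _Entry(value, created=now, last_access=now)

    def get(self, key: str, now: float) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        idle_expired = now - entry.last_access >= self.time_to_idle
        live_expired = now - entry.created >= self.time_to_live
        if idle_expired or live_expired:
            del self._entries[key]  # evict the stale entry
            return None
        entry.last_access = now  # a hit resets the idle timer, not the live timer
        return entry.value
```

An entry read every 50 minutes never goes idle, but it still disappears once it is three hours old; an entry nobody reads for an hour disappears sooner.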

NGINX default.conf (example)

NGINX can be used in a variety of ways, from load balancing to caching. One specific way we use it is to proxy our AWS S3 storage:

    set $s3 "s3host";
    set $bucket "s3bucket";
    set $proxy "proxyhost";

If you view the example file, you will see how these variables are applied to proxy the AWS storage.

Dockerfile.mura (example)

This file contains the instructions for assembling our Mura Docker image. In it we set startup parameters for the CFML engine, integrate FusionReactor, and, importantly, run a health check on the image:

    HEALTHCHECK --start-period=3m CMD curl --fail http://localhost:8888/?healthcheck || exit 1

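The --start-period=3m option gives the CFML engine three minutes to start up before failed checks are counted. After that, if the check fails (curl returns an error for a failed connection or an HTTP status of 400 or above), the container is reported as unhealthy and is not treated as ready to serve traffic until the issue is resolved. This is intended to ensure that new images pass the Mura Unit Tests.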
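For readers less familiar with curl, the following Python function is a rough equivalent of the health check command above: it returns exit code 0 when the endpoint answers successfully and 1 otherwise. It is an illustrative sketch, not part of the Mura image; only the default URL comes from the Dockerfile line, and the function name and timeout are assumptions.

```python
import http.client
import urllib.request


def healthcheck(url: str = "http://localhost:8888/?healthcheck", timeout: float = 5.0) -> int:
    """Return 0 if the URL answers with a non-error HTTP status, else 1.

    Roughly mirrors `curl --fail URL || exit 1`. One difference: urllib follows
    redirects, while curl without -L treats a 3xx response as the final answer.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 0 if response.status < 400 else 1
    except (OSError, http.client.HTTPException):
        # HTTPError (4xx/5xx), URLError (e.g. connection refused) and timeouts
        # are all OSError subclasses; HTTPException covers malformed responses.
        return 1
```

Wrapped as sys.exit(healthcheck()), it produces the same pass/fail exit status that Docker reads from the HEALTHCHECK command.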

Dockerfile.nginx (example)

This script runs during the assembly of the NGINX Docker Image. As with the NGINX default.conf (see above), this establishes the proxy settings for the deployment.

docker-compose.yml (example)

The docker-compose file is used when the Mura Docker instance is spun up, identifying all of the required images and their general configurations.

There are several key integration points here. The first is with Mura environment variables, which translate directly to the settings.ini.cfm file:

    environment:
      MURA_ENVIRONMENT: local
      LUCEE_JAVA_OPTS: "-Xms512m -Xmx1536m"
      MURA_DATASOURCE: ${dbname}
      MURA_DATABASE: ${dbname}
      MURA_DBTYPE: mysql
      MURA_DBUSERNAME: ${dbusername}
      MURA_DBPASSWORD: ${dbpassword}

Note the correspondence between the variables in the two files. The LUCEE_JAVA_OPTS value sets the Java heap for the Lucee engine: -Xms512m starts it at 512 MB and -Xmx1536m caps it at 1536 MB. Several values also use ${variable} placeholders; this keeps passwords and other secrets out of the repositories. During deployment, these placeholders are replaced with real values. Internally, Mura uses the Buddy.Works pipeline deployment process to perform this substitution. The same approach applies when values come from AWS Secrets Manager or other pipeline processes.
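The placeholder idea can be sketched with Python's standard string.Template, which happens to use the same ${name} syntax. This only illustrates the substitution step; it is not how Buddy.Works or AWS Secrets Manager perform it, and the function name and sample values are hypothetical.

```python
from __future__ import annotations

from string import Template


def render(template_text: str, values: dict[str, str]) -> str:
    """Replace ${name} placeholders with deployment-time values.

    Template.substitute raises KeyError if any placeholder has no value, so a
    misconfigured pipeline fails loudly instead of deploying a literal
    "${dbpassword}". A literal dollar sign must be written as $$.
    """
    return Template(template_text).substitute(values)
```

In a pipeline, values would typically come from the pipeline's secret store or from os.environ rather than from a dictionary written in code.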

Mura in the VPC

Mura instances are spread across AWS Availability Zones. Each zone is configured with Auto Scaling that scales out based on requests per target or CPU usage. It likewise scales in to reduce costs when demand drops.
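As a rough mental model of scaling on a metric, the sketch below computes a proportional task count: when the observed metric (CPU % or requests per target) is above its target value the count grows, and when it is below the count shrinks. AWS does not publish its target tracking logic as this exact formula; this is the simpler proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, used here only to illustrate the behavior. The minimum and maximum task counts are hypothetical.

```python
import math


def desired_task_count(current: int, metric: float, target: float,
                       minimum: int = 2, maximum: int = 10) -> int:
    """Proportional scaling estimate, clamped to [minimum, maximum].

    current: number of running tasks (must be >= 1)
    metric:  observed average value, e.g. CPU % or requests per target
    target:  the value the policy tries to hold the metric at
    """
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be > 0")
    proposed = math.ceil(current * metric / target)
    return max(minimum, min(maximum, proposed))
```

For example, two tasks averaging 90% CPU against a 60% target suggest three tasks (scale out), while four tasks at 20% suggest two (scale in); the lower bound keeps at least one task per zone available for redundancy.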

Each Availability Zone uses Fargate as the capacity provider, with Amazon Elastic Container Service (ECS) as the orchestration service for the Mura Docker containers. Fargate Spot can also be used to provide a low-cost option for fault-tolerant workloads.

Each zone contains an access point to the Elastic File System (EFS), and ElastiCache provides persistent session storage, allowing for seamless scale-in and scale-out events.

Aurora Serverless (MySQL 5.6- or 5.7-compatible) provides automatic failover and scales automatically based on application load.

Public Network

Amazon CloudFront is the public-facing, global content delivery network (CDN) for the VPC, with Mura and its assets as the origin. This ensures low latency, high resiliency, and high transfer speeds across all locales.

Behind this is the public subnet. An Elastic Load Balancer uses AWS Certificate Manager to provide secure connections across the redundant Availability Zones. A NAT gateway provides outbound internet access for the Mura containers. The “Bastion” jump host provides direct access for operations or the server management team (see Private Network, below).

Private Network

The private network is placed across multiple availability zones to provide high availability and redundancy.

Bastion (aka “jump”) servers act as an extra layer of security by keeping containers isolated from the public internet. Server operations or management staff can reach the containers directly through the Bastion host. It can also be configured to allow access through other AWS services, such as AWS Direct Connect or AWS Client VPN.